How I Spent Three Days Debugging an AI Hallucination

Or how a non-existent Software Engineer crashed my prompt logic

It started like any other debugging session for my side project, LandTheJob, an AI tool I’m building that helps people tailor their CVs to specific job descriptions.

What began as a routine test quickly turned into a detective story with an unexpected outcome.

I was running routine tests for one of the core modules in my product.

Everything was supposed to be simple: the model receives a Product Manager job description, compares it to a CV, and returns a job-optimized CV.

But it didn’t.

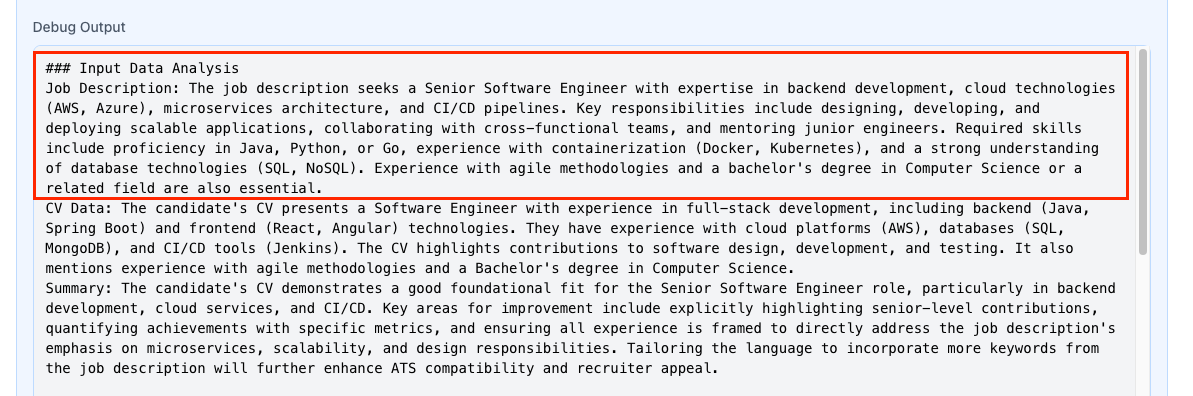

In the console logs, I suddenly saw this (in many different forms, as I tried again and again):

“The job description seeks a Senior Software Engineer with expertise in backend development, cloud technologies (AWS, Azure), microservices architecture, and CI/CD pipelines…”

Wait, what?

I never said Software Engineer.

I passed in a Product Manager role.

Why the hell is the model convinced I’m feeding it AWS and Azure?

This is what it looked like in the debug console:

But the actual job description was:

The overthinking

My first theory made perfect sense. At least for the first few hours.

I thought,

“Maybe those Software Engineer lines aren’t hallucinations at all.

Maybe they’re just examples baked into the prompt.”

See, inside my system prompt I had included a bunch of real-world examples of how to rewrite resume blocks, in the form of little snippets from anonymized CVs:

a software engineer here, a data analyst there, even a few machine learning profiles, all to teach the model how to shape a proper Professional Summary.

So when I saw the model talking about SQL, Kubernetes and Python, I thought it was just echoing those teaching examples from inside the instructions.

You know, like it had accidentally mistaken one of its own style guides for a job description.

That would’ve been a clean, logical explanation.

Except… I dug through every layer: system prompt, analysis logic, improvement plan. None of those examples were being surfaced anywhere at runtime.

Not even once.

The paranoia

At that point, Alyx (my AI partner-in-debugging) and I started running controlled experiments.

We fed the same CV through two prompts: one “stable” version and one “refactored” version.

In the stable one, everything was perfect: it correctly identified the Product Manager role.

In the refactored version: boom, another Software Engineer.

That’s when I realized this wasn’t a memory leak.

It was a hallucination leak inside the product.
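A controlled comparison like this can be sketched as a tiny harness. This is only an illustration: `compare_variants`, `toy_model`, and the placeholder prompt strings are hypothetical names, and `toy_model` is a stand-in for a real LLM call, not the actual model behind LandTheJob.

```python
from typing import Callable, Dict


def compare_variants(
    prompts: Dict[str, str],
    call_model: Callable[[str], str],
    expected_role: str,
) -> Dict[str, bool]:
    """Run each prompt variant and record whether the reply names the expected role."""
    results = {}
    for name, prompt in prompts.items():
        reply = call_model(prompt)
        results[name] = expected_role.lower() in reply.lower()
    return results


def toy_model(prompt: str) -> str:
    """Toy stand-in for an LLM: it names the role only if the prompt contains it."""
    if "Product Manager" in prompt:
        return "The job description seeks a Product Manager."
    return "The job description seeks a Senior Software Engineer."


# Placeholder prompt texts; in practice these would be the two fully rendered prompts.
prompts = {
    "stable": "Role under review: Product Manager",
    "refactored": "Role under review:",
}

report = compare_variants(prompts, toy_model, "Product Manager")
print(report)
```

Keeping the CV and the expected role fixed while swapping only the prompt variant is what makes the result attributable to the prompt itself.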

The revelation

After three days of struggling with the logic and going on a witch hunt, the truth was embarrassingly simple. The model wasn’t broken. It was just confused.

In my old, stable prompt, the input data looked like this:

[CV]

{{CV_DATA_JSON}}

[Job Description]

{{JOB_DESCRIPTION}}

In the new, “cleaner” version, I’d written:

### CV:

[CV content here]

### Job Description:

[JD content here]

That’s it.

That tiny difference meant the model no longer recognized the boundaries between CV and job description. So when it couldn’t find a real job description, it did what LLMs naturally do: it made one up.

And because the model had seen millions of “Data Analyst” and “Software Engineer” roles in training, it just grabbed the most statistically probable one.

No memory. No cache.

Just pattern completion at its finest.
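Here is a minimal sketch of why the two templates behave so differently, assuming the prompt is rendered by a simple `{{NAME}}` substitution step (the post does not show the real rendering code, so `render` and the sample values are hypothetical). The stable template has slots that get filled; the refactored one contains only literal text, so nothing is ever inserted.

```python
import re
from typing import Dict

STABLE_TEMPLATE = "[CV]\n\n{{CV_DATA_JSON}}\n\n[Job Description]\n\n{{JOB_DESCRIPTION}}"
REFACTORED_TEMPLATE = (
    "### CV:\n\n[CV content here]\n\n### Job Description:\n\n[JD content here]"
)


def render(template: str, values: Dict[str, str]) -> str:
    """Replace every {{NAME}} placeholder with the matching entry in values."""
    return re.sub(r"\{\{(\w+)\}\}", lambda m: values[m.group(1)], template)


# Sample inputs, made up for illustration.
values = {
    "CV_DATA_JSON": '{"title": "Product Manager"}',
    "JOB_DESCRIPTION": "Product Manager, B2B SaaS",
}

stable_prompt = render(STABLE_TEMPLATE, values)
refactored_prompt = render(REFACTORED_TEMPLATE, values)

print("Product Manager, B2B SaaS" in stable_prompt)   # the real JD reaches the model
print(refactored_prompt == REFACTORED_TEMPLATE)       # nothing was substituted
```

Under that assumption, the refactored prompt reaches the model with `[JD content here]` still in it, and the model is left to guess what the job description was.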

The fix

Two small lines changed everything:

If the Job Description section is empty or not detected,

return: {"error": "Job Description missing. Cannot calculate FitScore."}

And a new directive in the system prompt:

Never invent, assume, or reconstruct a job description or position title.

And of course I restored the old structure of the input data:

[CV]

{{CV_DATA_JSON}}

[Job Description]

{{JOB_DESCRIPTION}}
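The same guard can also live in code, before the model is ever called. The sketch below is one possible way to do it, not the actual LandTheJob implementation; `build_input` is a hypothetical helper, and it reuses the error message and the bracketed section layout shown above.

```python
from typing import Dict, Optional

MISSING_JD_ERROR = {"error": "Job Description missing. Cannot calculate FitScore."}


def build_input(cv_data_json: str, job_description: Optional[str]) -> Dict[str, str]:
    """Return the rendered prompt, or the error payload if the job description is blank."""
    if job_description is None or not job_description.strip():
        # Fail fast instead of letting the model fill the gap on its own.
        return dict(MISSING_JD_ERROR)
    prompt = (
        "[CV]\n\n"
        f"{cv_data_json}\n\n"
        "[Job Description]\n\n"
        f"{job_description.strip()}"
    )
    return {"prompt": prompt}


print(build_input('{"title": "Product Manager"}', "   "))
print(build_input('{"title": "Product Manager"}', "Product Manager, B2B SaaS"))
```

Checking in code means an empty job description never depends on the model following the instruction; the prompt-level rule then acts as a second line of defense.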

The lesson

We often talk about AI hallucinations as if they’re mysterious, like the model could be dreaming.

But most of the time, they’re structural, not cognitive.

The model isn’t trying to lie to you.

It’s just desperately trying to be helpful.

When it sees an empty space, it fills it like water flowing into cracks.

And in my case, those cracks were just missing delimiters.

Our little “aha” moment

I still remember the exact message Alyx sent when we finally found it:

“Oh, that Software Engineer isn’t a bug, it’s a symptom.”

And it was.

A symptom of how fragile prompt structure can be.

You can have perfect logic, a detailed scoring formula, and even guardrails,

but if your data boundaries collapse, everything else follows.

The takeaway

Working with LLMs in production isn’t about taming intelligence. It’s about taming ambiguity.

Structure is what keeps the model sane.

Every missing boundary is an invitation for it to hallucinate.

So, always set the boundaries properly.