How A/B Testing Helps You Build Better Products

In IT, A/B testing is the process of splitting users into several groups and testing different functionality on each of them. One group, the control group, keeps using the existing functionality, while the second group (and the third, fourth, and so on) gets a new feature.
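One common way to split users is to hash a stable user ID, so that each user always lands in the same group. The sketch below assumes string user IDs. `assign_group` is a hypothetical helper and the experiment name is illustrative; neither belongs to any particular A/B testing tool.

```python
import hashlib


def assign_group(user_id: str, experiment: str, groups: list[str]) -> str:
    """Deterministically assign a user to one of the experiment groups.

    Hashing the user ID together with the experiment name means a user
    always sees the same variant of this experiment, while different
    experiments get independent splits.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Interpret the hex digest as a large integer and map it to a group index.
    return groups[int(digest, 16) % len(groups)]


# Hypothetical experiment: "control" keeps the old flow, the others get new ones.
groups = ["control", "variant_b", "variant_c"]
print(assign_group("user-42", "new_registration_flow", groups))
```

Because the hash output is effectively uniform, each group receives roughly the same share of users. No assignment table needs to be stored.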

At the same time, you start collecting metrics for group A and group B. Which metrics you collect depends on the functionality being tested: for example, how many users clicked the modified UI element compared with the existing one.
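Here is a minimal sketch of comparing a click metric between groups. It assumes each record in a hypothetical event log is a `(group, clicked)` pair for one user who saw the element. The code computes the click-through rate per group and the relative lift of a variant over the control. The data below is made up.

```python
from collections import defaultdict


def click_through_rates(events):
    """Return {group: clicks / users shown} from (group, clicked) records."""
    shown = defaultdict(int)
    clicked = defaultdict(int)
    for group, did_click in events:
        shown[group] += 1
        if did_click:
            clicked[group] += 1
    return {group: clicked[group] / shown[group] for group in shown}


def relative_lift(control_rate, variant_rate):
    """Relative change of the variant over the control, e.g. 0.46 means +46%."""
    return (variant_rate - control_rate) / control_rate


# Made-up events: one record per user who saw the UI element.
events = [
    ("A", True), ("A", False), ("A", False), ("A", False),
    ("B", True), ("B", True), ("B", False), ("B", False),
]
rates = click_through_rates(events)
print(rates)                                  # {'A': 0.25, 'B': 0.5}
print(relative_lift(rates["A"], rates["B"]))  # 1.0
```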

In some cases, you might test more than two variants at once to get more diverse results and finish testing faster.

You must also determine whether the difference in the observed metric between groups A and B is actually caused by the change you are testing or is just random chance. To do this, you should run several randomized A/B tests rather than rely on a single one.
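Alongside repeating randomized tests, a standard way to judge whether a difference exceeds random noise is a two-proportion z-test. The sketch below assumes a binary metric (clicked or not clicked). It also assumes samples large enough for the normal approximation to hold. The counts in the usage line are invented.

```python
import math


def two_proportion_z_test(clicks_a, users_a, clicks_b, users_b):
    """Two-sided z-test for a difference between two click/conversion rates.

    Returns (z, p_value). Under the null hypothesis both groups share one
    true rate, so the standard error uses the pooled rate.
    """
    rate_a = clicks_a / users_a
    rate_b = clicks_b / users_b
    pooled = (clicks_a + clicks_b) / (users_a + users_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    if se == 0:
        raise ValueError("pooled rate is 0 or 1; the test is undefined")
    z = (rate_b - rate_a) / se
    # P(|Z| >= |z|) for a standard normal Z equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Invented counts: 200 of 10,000 users clicked in A, 250 of 10,000 in B.
z, p = two_proportion_z_test(200, 10_000, 250, 10_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value (a common threshold is 0.05) suggests the difference is unlikely to be due to chance alone. It does not tell you how large or how important the effect is.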

When and Why You Should Run A/B Tests

Run an A/B test when you want to find out whether a change shown to a test group (for example, an updated registration flow) actually changes the outcome.

A/B tests are often used to optimize specific metrics, such as conversion rate, click-through rate, or user satisfaction.

An A/B test gives you evidence, rather than an assumption, that a difference between the control group and the test groups really exists.

Sometimes even a small change in a measured metric has a significant impact. For example, making a website load a few milliseconds faster may noticeably increase revenue.

A/B testing can also reveal unexpected effects beyond the metrics you measure, which gives you valuable information.

For example, if you were testing a new Buy button, the main metric (the number of clicks) might stay almost the same. However, you might find that users start complaining about the new button because it makes their experience worse.

Of course, this does not necessarily mean that the “B” Buy button is worse than the “A” Buy button; it might even be better overall. Users may simply be used to the old one and reluctant to change their behavior. This is one of the well-known pitfalls of A/B testing.

Necessary Components of A/B Testing

First, you need test subjects (that is, users) and a way to split them into two or more test groups. For the test to be accurate, there should be little or no interference between users in different groups.

Second, the number of users should be sufficient for testing. Without enough users, the results may lack statistical significance, making it difficult to draw reliable conclusions. This means you need a sample size large enough to detect meaningful differences between the test groups.

Keep in mind that smaller changes are harder to detect than larger ones and require more users, yet they can still be significant.

Finally, you must define the metrics that will be used to measure the results of the test. User engagement, for example, could be a good metric.

What A/B Testing Usually Shows

Here is one observation worth sharing. We build features believing they will be useful. In reality, however, most features do not improve the metrics they were intended to improve.

Successful Case Studies of A/B Testing

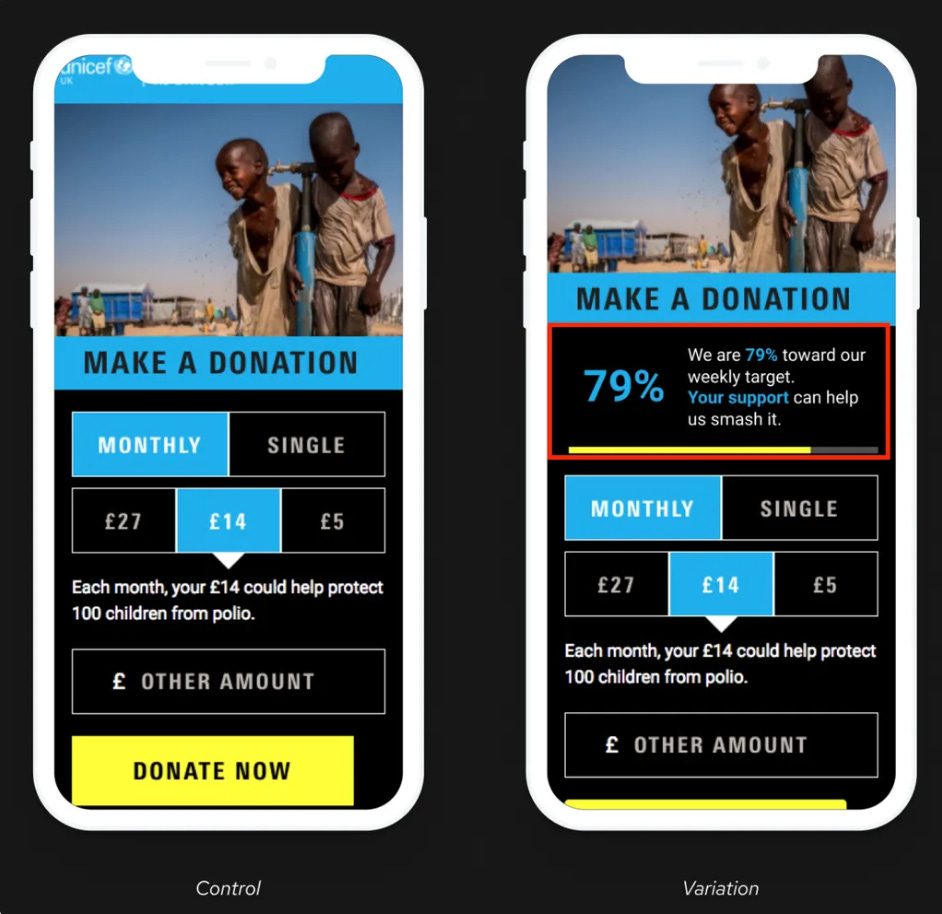

I have taken these examples from Conversion.com. They show that even slight changes can yield unexpected, and often positive, results. In each of these cases, the change was made to a website element.

Changes to the ‘total look’ Idle screen displays resulted in a 51% increase in interaction rate. Source.

Implementing a softer call-to-action on the ‘our vehicles’ pages increased the number of leads by 46%. Source.

Introducing a weekly target progress bar increased the average size of single donations by 51%. Source.